Know about Libstorage – Storage Framework for Docker Engine (Demo)


This article explains why Docker engine needs a storage framework. It then describes how we integrated our Libstorage framework into Docker engine to provide a clean, pluggable storage management layer. The Libstorage design is loosely modeled on libnetwork for Docker engine. The framework and its current functionality are discussed in detail, followed by suggested extensions and considerations. Since this work was done, Docker has acquired Infinit (https://blog.docker.com/2016/12/docker-acquires-infinit/) to address this shortcoming, so I expect much of this gap to be closed in forthcoming Docker engine releases.

1 Introduction

Docker engine is the open-source tool that provides container lifecycle management. It has made understanding, appreciating and deploying applications over containers a breeze. While working with Docker engine, however, we found shortcomings, especially in volume management. The community’s major concern with Docker engine has always been provisioning volumes for containers. Volume lifecycle management for containers does not seem to have been thought through well in the various proposals that were floated. We believe there is more to it, and thus Libstorage was born. Currently, Docker expects application deployers to choose the volume driver. This is plain ugly. It is the cluster administrator who decides which volume drivers are deployed. Application developers just need storage; they should never have to know, or care, about the underlying storage stack.

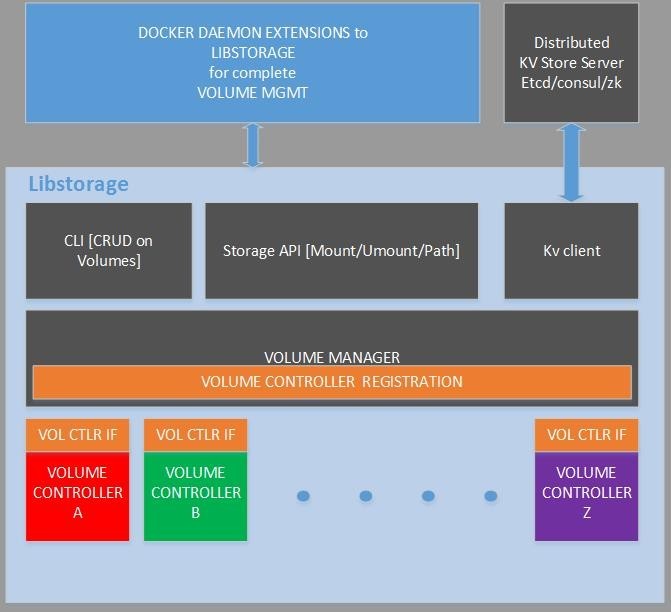

2 Libstorage Stack

Libstorage as a framework defines a complete set of volume lifecycle management methods for containers. The Docker daemon interacts with the Volume Manager to carry out volume management. Libstorage standardizes the interfaces that any Volume Manager must provide. Only one Volume Manager can be active in the cluster. Libstorage is integrated with a distributed key-value store to keep volume configuration in sync across the cluster, so any node that is part of the cluster knows about all volumes and their states.

The Volume Controller is the glue between a storage stack and Docker engine. Many Volume Controllers can be enabled under the top-level Volume Manager. The Libstorage Volume Manager interacts directly with either a Volume Controller or with volumes to complete the intended functionality.
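The controller role described above can be sketched as a small abstract interface. This is a minimal illustration only: the method names (`create`, `remove`, `mount`, `unmount`), the `InMemoryController` class and the `/mnt/` path are assumptions made for the sketch, not the actual Libstorage API.

```python
from abc import ABC, abstractmethod


class VolumeController(ABC):
    """Hypothetical lifecycle interface a controller offers to the Volume Manager."""

    @abstractmethod
    def create(self, name, size_mb):
        """Provision a new volume."""

    @abstractmethod
    def remove(self, name):
        """Destroy a volume that is not in use."""

    @abstractmethod
    def mount(self, name):
        """Mount the volume and return its host path."""

    @abstractmethod
    def unmount(self, name):
        """Release a previously mounted volume."""


class InMemoryController(VolumeController):
    """Toy controller that only tracks state, used to exercise the interface."""

    def __init__(self):
        self.volumes = {}     # volume name -> size in MB
        self.mounted = set()  # names of currently mounted volumes

    def create(self, name, size_mb):
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        self.volumes[name] = size_mb

    def remove(self, name):
        if name in self.mounted:
            raise RuntimeError(f"volume {name!r} is in use")
        del self.volumes[name]

    def mount(self, name):
        if name not in self.volumes:
            raise KeyError(name)
        self.mounted.add(name)
        return f"/mnt/{name}"  # illustrative path, not the real mountpoint

    def unmount(self, name):
        self.mounted.discard(name)
```

A real controller would replace the dictionary bookkeeping with calls into its storage stack, while the Volume Manager would only ever see the four interface methods.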

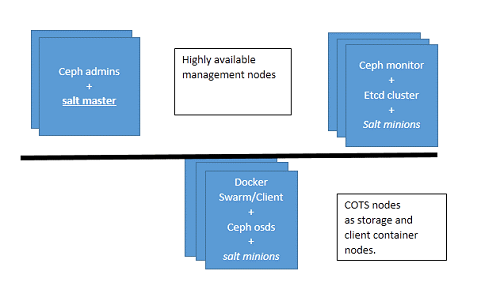

3 Cluster Setup

SaltStack is the underlying technology for bringing up the whole cluster. Customized VM flavors with the necessary dependencies were pre-baked as Amazon Machine Images (AMIs) and DigitalOcean snapshots. Terraform scripts bootstrap the cloud cluster from a few parameters: the target cloud provider or private hosting, and the credentials needed to kick-start the process.

Provisioning and management of Ceph cloud storage (RADOS block devices) was tied into the Docker engine volume management framework. It can easily be extended to other cloud storage solutions such as GlusterFS and CephFS. Ceph RADOS Gateway and Amazon S3 were used for object archival and data migration.

4 Volume Manager

The Volume Manager is the top-level Libstorage module; it interacts directly with the Docker daemon and with the external distributed key-value store. The Volume Manager ensures that volume configuration is consistent across all nodes in the cluster. It defines a consistent volume management interface, both for the Docker daemon that connects to it and for the Volume Controllers within Libstorage that can be enabled in the cluster. It also defines a standard set of policies that Volume Controllers can expose.
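The idea of keeping volume configuration in a shared key-value store can be sketched as follows. Everything here is an assumption for illustration: `KVStore` is a plain in-process dictionary standing in for a distributed store, the `/libstorage/volumes/` key layout is hypothetical, and only the record field names (`Name`, `Policy`, `MBSize`, `CreatedBy`) are borrowed from the `dsvolume info` output shown later in this article.

```python
import json


class KVStore:
    """Stand-in for a distributed key-value store; a plain dict in this sketch."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)

    def keys(self, prefix):
        return sorted(k for k in self._data if k.startswith(prefix))


class VolumeManager:
    """Per-node manager; every node reads and writes the same store."""

    PREFIX = "/libstorage/volumes/"  # hypothetical key layout

    def __init__(self, node, store):
        self.node = node
        self.store = store

    def create(self, name, policy, size_mb):
        key = self.PREFIX + name
        if self.store.get(key) is not None:
            raise ValueError(f"volume {name!r} already exists")
        record = {"Name": name, "Policy": policy,
                  "MBSize": size_mb, "CreatedBy": self.node}
        self.store.put(key, json.dumps(record))

    def list_volumes(self):
        # Any node sees every volume because the records live in the store.
        return [json.loads(self.store.get(k))
                for k in self.store.keys(self.PREFIX)]
```

With two managers sharing one store, a volume created through the manager on one node shows up in `list_volumes()` on the other, which is the property the section describes.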

4.1 Pluggable Volume Manager

The Pluggable Volume Manager is an implementation of this interface and the needed functionality. The top-level Volume Manager is itself a pluggable module to Docker engine.

5 Volume Controllers

Volume Controllers are pluggable modules to the Volume Manager. Each Volume Controller exports one or more policies that it supports, and users target a Volume Controller through its exported policies. For example, if the policy is distributed, the volume is available on any node in the cluster. If the policy is local, the volume is still visible on every node in the cluster, but its data is held locally on the host filesystem. Volume Controllers can use any storage stack underneath and provide a standard view of volume management through the top-level Volume Manager.
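Selecting a controller by policy can be sketched as a simple registry. The `PolicyRegistry` class is hypothetical, and the rule that each policy is exported by exactly one controller is an assumption of this sketch; the controller and policy names are the ones that appear in the console logs later in this article.

```python
class PolicyRegistry:
    """Maps each exported policy to the controller that serves it (sketch)."""

    def __init__(self):
        self._by_policy = {}

    def register(self, controller_name, policies):
        for policy in policies:
            # Assumption: a policy may be exported by only one controller.
            if policy in self._by_policy:
                raise ValueError(f"policy {policy!r} is already exported")
            self._by_policy[policy] = controller_name

    def controller_for(self, policy):
        try:
            return self._by_policy[policy]
        except KeyError:
            raise ValueError(
                f"no volume controller exports policy {policy!r}") from None


registry = PolicyRegistry()
registry.register("ds-ceph", ["distributed"])
registry.register("ds-local", ["local"])
registry.register("ds-ram", ["ram", "secure"])
```

A user who asks for the `secure` policy is routed to `ds-ram` without ever naming the controller, which keeps the storage stack out of the application developer's view.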

5.1 Pluggable Volume Controller

Dolphinstor implements Ceph, Gluster, Local and RAM volume controllers. Once created, a volume is visible on all nodes in the cluster. Two attributes are set at volume creation: whether containers may mount the volume (governed by its sharing properties), and whether the volume data is available from other nodes (only if the volume is distributed). The Ceph Volume Controller implements the distributed policy, which guarantees that any volume created with it is available on any node in the cluster. The Local Volume Controller implements the local policy, which guarantees that volume data is present only on the host machines on which the container is scheduled; containers scheduled on any host see the volume, but each host holds its own local copy. The RAM Volume Controller defines two policies, ram and secure. Volume data is held in RAM and is therefore volatile. A secure-policy volume cannot be shared, even across containers on the same host.
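The sharing rules above can be sketched as an attach check. This is an illustration under stated assumptions: the `Volume` dataclass and `attach` function are hypothetical, and the sketch assumes that an unshared volume may be used by at most one container at a time and that the secure policy always forces `shared` to false (consistent with the `dsvolume ls` output later in this article).

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    policy: str
    shared: bool = True
    containers: list = field(default_factory=list)

    def __post_init__(self):
        # Secure volumes are never shared, whatever the caller asked for.
        if self.policy == "secure":
            self.shared = False


def attach(volume, container_id):
    """Record a container as a user of the volume, enforcing sharing rules."""
    if volume.containers and not volume.shared:
        raise PermissionError(
            f"volume {volume.name!r} is not shared and is already in use")
    volume.containers.append(container_id)
```

Under these assumptions, a second `attach` on a secure volume is rejected, while a shared distributed volume accepts any number of containers.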

6 CLI Extensions

Below is the list of CLI extensions provided and managed by Libstorage.

• docker dsvolume create [-z|--size=MB] [-p|--policy=distributed|distributed-fs|local|ram|secure] [-s|--shared=true|false] [-m|--automigrate=true|false] [-f|--fstype=raw,ext2,ext3,btrfs,xfs] [-o|--opt=[]] VOLUME

If a volume has a backing block device, the device is mounted within the volume as well. Specifying raw for fstype during volume creation leaves the volume unformatted; it is presented to containers as a raw block device.

• docker dsvolume rm VOLUME

• docker dsvolume info VOLUME [VOLUME...]

• docker dsvolume ls

• docker dsvolume usage VOLUME [VOLUME...]

• docker dsvolume rollback VOLUME@SNAPSHOT

• docker dsvolume snapshot create -v|--volume=VOLUME SNAPSHOT

• docker dsvolume snapshot rm VOLUME@SNAP

• docker dsvolume snapshot ls [-v|--volume=VOLUME]

• docker dsvolume snapshot info VOLUME@SNAPSHOT [VOLUME@SNAPSHOT...]

• docker dsvolume snapshot clone srcVOLUME@SNAPSHOT NEWVOLUME

• docker dsvolume qos {create|edit} [--read-iops=100] [--read-bw=10000] [--write-iops=100] [--write-bw=10000] [--weight=500] PROFILE

• docker dsvolume qos rm PROFILE

• docker dsvolume qos ls

• docker dsvolume qos info PROFILE [PROFILE...]

• docker dsvolume qos {enable|disable} [-g|--global] VOLUME [VOLUME...]

• docker dsvolume qos apply -p=PROFILE VOLUME [VOLUME...]
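The `dsvolume create` synopsis at the top of this list can be modeled with a small argument parser. This is a sketch, not the tool's real option handling: the flags and choices follow the synopsis, the defaults (policy distributed, fstype xfs, shared true, automigrate false) are inferred from the console logs in the next section, and `parse_bool`, `build_parser` and `needs_format` are hypothetical names. Note that the console logs also pass ext4 and a bare `-m`, which the synopsis does not list and this sketch does not accept.

```python
import argparse


def parse_bool(text):
    """Accept the literal strings true/false, as in the synopsis."""
    lowered = text.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    raise argparse.ArgumentTypeError(f"expected true or false, got {text!r}")


def build_parser():
    parser = argparse.ArgumentParser(prog="dsvolume create")
    parser.add_argument("-z", "--size", type=int, metavar="MB")
    parser.add_argument(
        "-p", "--policy", default="distributed",
        choices=["distributed", "distributed-fs", "local", "ram", "secure"])
    parser.add_argument("-s", "--shared", type=parse_bool, default=True)
    parser.add_argument("-m", "--automigrate", type=parse_bool, default=False)
    parser.add_argument(
        "-f", "--fstype", default="xfs",
        choices=["raw", "ext2", "ext3", "btrfs", "xfs"])
    parser.add_argument("-o", "--opt", action="append", default=[])
    parser.add_argument("volume", metavar="VOLUME")
    return parser


def needs_format(args):
    """A raw volume is handed to containers as an unformatted block device."""
    return args.fstype != "raw"
```

For example, `build_parser().parse_args(["--size=100", "--fstype=raw", "rawvol"])` yields a namespace for which `needs_format` returns false, matching the raw-volume behavior described above.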

7 Console Logs

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume ls

NAME Created Type/Fs Policy Size(MB) Shared Inuse Path

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume create --help

Usage: ./dolphindocker dsvolume create [OPTIONS] VOLUME-NAME

Creates a new dsvolume with a name specified by the user

-f, --filesys=xfs volume size

--help=false Print usage

-z, --size= volume size

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume create -f=ext4 -z=100 -m -p=distributed demovol1

2015/10/08 02:30:23 VolumeCreate(demovol1) with opts map[name:demovol1 policy:distributed m

dsvolume create acked response {"Name":"demovol1","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume create -z=100 -p=local demolocal1

2015/10/08 02:30:53 VolumeCreate(demolocal1) with opts map[shared:true fstype:xfs automigra

dsvolume create acked response {"Name":"demolocal1","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume create -z=100 -p=ram demoram

2015/10/08 02:31:04 VolumeCreate(demoram) with opts map[shared:true fstype:xfs automigrate:

dsvolume create acked response {"Name":"demoram","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume create -z=100 -p=secure demosecure

2015/10/08 02:31:17 VolumeCreate(demosecure) with opts map[name:demosecure policy:secure mb

dsvolume create acked response {"Name":"demosecure","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume ls

NAME Created Type/Fs Policy Size(MB) Shared Inuse Path

demosecure dolphinhost3 ds-ram/tmpfs secure 100 false -

demovol1 dolphinhost3 ds-ceph/ext4 distributed 100 true -

demolocal1 dolphinhost3 ds-local/ local 0 true -

demoram dolphinhost3 ds-ram/tmpfs ram 100 true -

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume info demosecure demolocal1

volume info on demosecure

[

{

"Name": "demosecure", "Voltype": "ds-ram", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 02:31:17 EDT 2015", "Policy": "secure",

"Fstype": "tmpfs", "MBSize": 100, "AutoMigrate": false, "Shared": false, "Mountpoint": "", "Inuse": null, "Containers": null,

"LastAccessTimestamp": "Mon Jan 1 00:00:00 UTC 0001", "IsClone": false,

"ParentSnapshot": "", "QoSState": false, "QoSProfile": ""

},

{

"Name": "demolocal1", "Voltype": "ds-local", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 02:30:53 EDT 2015", "Policy": "local",

"Fstype": "", "MBSize": 0, "AutoMigrate": false, "Shared": true, "Mountpoint": "", "Inuse": null, "Containers": null,

"LastAccessTimestamp": "Mon Jan 1 00:00:00 UTC 0001", "IsClone": false,

"ParentSnapshot": "", "QoSState": false, "QoSProfile": ""

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume usage demovol1

volume usage on demovol1

[

{

"Name": "demovol1", "Usage": [

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1/lost+found", "size": "12K"

},

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1", "size": "15K"

},

{

"file": "total", "size": "15K"

}

],

"Size": "100M", "Err": ""

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume usage -s=false demovol1

volume usage on demovol1

[

{

"Name": "demovol1", "Usage": [

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1/hosts", "size": "1.0K"

},

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1/lost+found", "size": "12K"

},

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1/1", "size": "0"

},

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1/hostname", "size": "1.0K"

},

{

"file": "/var/lib/docker/volumes/_dolphinstor/demovol1", "size": "15K"

},

{

"file": "total", "size": "15K"

}

],

"Size": "100M", "Err": ""

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume info demovol1

volume info on demovol1

[

{

"Name": "demovol1", "Voltype": "ds-ceph", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 02:30:23 EDT 2015", "Policy": "distributed",

"Fstype": "ext4", "MBSize": 100, "AutoMigrate": true,

"Shared": true, "Mountpoint": "", "Inuse": [

"dolphinhost3"

],

"Containers": [ "5000b791e0c78e7c8f3b43b72b42206d0eaed3150a825e1f055637b31676a77f@dolphinhost1" "0c8a9d483a63402441185203b0262f7f3b8d761a8a58145ed55c93835ba83538@dolphinhost2"

],

"LastAccessTimestamp": "Thu Oct 8 03:46:51 EDT 2015", "IsClone": false,

"ParentSnapshot": "", "QoSState": false, "QoSProfile": ""

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume qos ls

Global QoS Enabled

Name ReadIOPS ReadBW WriteIOPS WriteBW Weight

default 200 20000 100 10000 600

demoprofile 256 20000 100 10000 555

myprofile 200 10000 100 10000 555

newprofile 200 2000 100 1000 777

dsvolume qos list acked response

[lns@dolphinhost3 bins]$ ./dolphindocker run -it -v demovol1:/opt/demo ubuntu:latest bash

root@1dba3c87ca04:/# dd if=/dev/rbd0 of=/dev/null bs=1M count=1

1+0 records in

1+0 records out

1048576 bytes (1.0 MB) copied, 0.0625218 s, 16.8 MB/s

root@1dba3c87ca04:/# exit

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume info demovol1

volume info on demovol1

[

{

"Name": "demovol1", "Voltype": "ds-ceph", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 02:30:23 EDT 2015", "Policy": "distributed",

"Fstype": "ext4", "MBSize": 100, "AutoMigrate": true, "Shared": true, "Mountpoint": "",

"Inuse": [], "Containers": [

"5000b791e0c78e7c8f3b43b72b42206d0eaed3150a825e1f055637b31676a77f@dolphinhost3" "0c8a9d483a63402441185203b0262f7f3b8d761a8a58145ed55c93835ba83538@dolphinhost3" "87c7a2462879103fd3376be4aae352568e5e36659820b92d567829c0b8375255@dolphinhost3" "f3feb1f15ed614618c02321e7739e0476f23891aa7bb1b2d5211ba1e2641c643@dolphinhost3" "76ab5182082ac30545725c843177fa07d06e3ec76a2af41b1e8e1dee42670759@dolphinhost3" "c6226469aa036f277f237643141d4d168856692134cea91f724455753c632533@dolphinhost3" "426b57492c7c05220b75d05a13ad144742b92fa696611465562169e1cb74ea6b@dolphinhost3" "2419534dd70ba2775ca1880fb71d196d31a167579d0ee85d5203be3cc0ff574e@dolphinhost3" "c3afeac73b389a69a856eeccf3098e778d1b0087a7a543705d6bfbba4f5c6803@dolphinhost3" "7bd28eed915c450459bd1a27d49325548d0791cbbaac670dcdae1f8d97596c7e@dolphinhost3" "0fc0217b6cda2f02ef27dca9d6dd3913bda7a871012d1073f29a864ae77bc61f@dolphinhost3"

],

"LastAccessTimestamp": "Thu Oct 8 05:16:26 EDT 2015", "IsClone": false,

"ParentSnapshot": "", "QoSState": false, "QoSProfile": ""

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume qos apply -p=newprofile demovol1

2015/10/08 05:17:04 QoSApply(demovol1) with opts {Name:newprofile Opts:map[name:newprofile

dsvolume QoS apply response

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume info demovol1

volume info

[

{

"Name": "demovol1", "Voltype": "ds-ceph", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 02:30:23 EDT 2015", "Policy": "distributed",

"Fstype": "ext4", "MBSize": 100, "AutoMigrate": true,

"2419534dd70ba2775ca1880fb71d196d31a167579d0ee85d5203be3cc0ff574e@dolphinhost3" "c3afeac73b389a69a856eeccf3098e778d1b0087a7a543705d6bfbba4f5c6803@dolphinhost3" "7bd28eed915c450459bd1a27d49325548d0791cbbaac670dcdae1f8d97596c7e@dolphinhost3" "0fc0217b6cda2f02ef27dca9d6dd3913bda7a871012d1073f29a864ae77bc61f@dolphinhost3"

],

"LastAccessTimestamp": "Thu Oct 8 05:16:26 EDT 2015", "IsClone": false,

"ParentSnapshot": "", "QoSState": false, "QoSProfile": "newprofile"

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume qos enable -g demovol1

2015/10/08 05:17:22 QoSEnable with opts {Name: Opts:map[global:true volume:demovol1]}

dsvolume QoS enable response

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume qos ls

Global QoS Enabled

Name ReadIOPS ReadBW WriteIOPS WriteBW Weight

default 200 20000 100 10000 600

demoprofile 256 20000 100 10000 555

myprofile 200 10000 100 10000 555

newprofile 200 2000 100 1000 777

dsvolume qos list acked response

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume info demovol1

volume info on demovol1

[

{

"Name": "demovol1", "Voltype": "ds-ceph", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 02:30:23 EDT 2015", "Policy": "distributed",

"Fstype": "ext4", "MBSize": 100, "AutoMigrate": true,

"2419534dd70ba2775ca1880fb71d196d31a167579d0ee85d5203be3cc0ff574e@dolphinhost3" "c3afeac73b389a69a856eeccf3098e778d1b0087a7a543705d6bfbba4f5c6803@dolphinhost3" "7bd28eed915c450459bd1a27d49325548d0791cbbaac670dcdae1f8d97596c7e@dolphinhost3" "0fc0217b6cda2f02ef27dca9d6dd3913bda7a871012d1073f29a864ae77bc61f@dolphinhost3"

],

"LastAccessTimestamp": "Thu Oct 8 05:16:26 EDT 2015", "IsClone": false,

"ParentSnapshot": "", "QoSState": true, "QoSProfile": "newprofile"

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker run -it -v demovol1:/opt/demo ubuntu:latest bash

root@9048672839d6:/# dd if=/dev/rbd0 of=/dev/null count=1 bs=1M

1+0 records in

1+0 records out

1048576 bytes (1.0 MB) copied, 522.243 s, 2.0 kB/s

root@9048672839d6:/# exit

[lns@dolphinhost3 bins]$

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume create -z=100 newvolume

2015/10/08 05:48:13 VolumeCreate(newvolume) with opts map[name:newvolume policy:distributed

dsvolume create acked response {"Name":"newvolume","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker run -it -v newvolume:/opt/vol ubuntu:latest bash

root@2b1e11bc2d45:/# cd /opt/vol/

root@2b1e11bc2d45:/opt/vol# touch 1

root@2b1e11bc2d45:/opt/vol# cp /etc/hosts .

root@2b1e11bc2d45:/opt/vol# cp /etc/hostname .

root@2b1e11bc2d45:/opt/vol# ls

1 hostname hosts

root@2b1e11bc2d45:/opt/vol# exit

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot ls

Volume@Snapshot CreatedBy Size NumChildren

demovol1@demosnap1 dolphinhost3 104857600 [0]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot create -v=newvolume newsnap

2015/10/08 05:49:09 SnapshotCreate(newsnap) with opts {Name:newsnap Volume:newvolume Type:d

dsvolume snapshot create response {"Name":"newsnap","Volume":"newvolume","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot ls

Volume@Snapshot CreatedBy Size NumChildren

demovol1@demosnap1 dolphinhost3 104857600 [0]

newvolume@newsnap dolphinhost3 104857600 [0]

[lns@dolphinhost3 bins]$ ./dolphindocker run -it -v newvolume:/opt/vol ubuntu:latest bash

root@f54ec93290c0:/# cd /opt/vol/

root@f54ec93290c0:/opt/vol# ls

1 hostname hosts

root@f54ec93290c0:/opt/vol# rm 1 hostname hosts

root@f54ec93290c0:/opt/vol# touch 2

root@f54ec93290c0:/opt/vol# cp /var/log/alternatives.log .

root@f54ec93290c0:/opt/vol# exit

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot ls

Volume@Snapshot CreatedBy Size NumChildren

demovol1@demosnap1 dolphinhost3 104857600 [0]

newvolume@newsnap dolphinhost3 104857600 [0]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot newvolume@newsnap firstclone

Usage: ./dolphindocker dsvolume snapshot [OPTIONS] COMMAND [OPTIONS] [arg...]

Commands:

create Create a volume snapshot

rm Remove a volume snapshot

ls List all volume snapshots

info Display information of a volume snapshot

clone clone snapshot to create a volume

rollback rollback volume to a snapshot

Run './dolphindocker dsvolume snapshot COMMAND --help' for more information on a command.

--help=false Print usage

invalid command : [newvolume@newsnap firstclone]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot clone --help

Usage: ./dolphindocker dsvolume snapshot clone [OPTIONS] VOLUME@SNAPSHOT CLONEVOLUME

clones a dsvolume snapshot and creates a new volume with a name specified by the user

--help=false Print usage

-o, --opt=map[] Other driver options for volume snapshot

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot clone newvolume@newsnap firstclo

2015/10/08 05:56:37 clone source: newvolume@newsnap, dest: firstclone

2015/10/08 05:56:37 clone source: volume newvolume, snapshot newsnap

2015/10/08 05:56:37 CloneCreate(newvolume@newsnap) with opts {Name:newsnap Volume:newvolume

dsvolume snapshot clone response {"Name":"newsnap","Volume":"","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume ls

NAME Created Type/Fs Policy Size(MB) Shared Inuse Path

demosecure dolphinhost3 ds-ram/tmpfs secure 100 false -

demovol1 dolphinhost3 ds-ceph/ext4 distributed 100 true -

newvolume dolphinhost3 ds-ceph/xfs distributed 100 true -

firstclone dolphinhost3 ds-ceph/xfs distributed 100 true -

demolocal1 dolphinhost3 ds-local/ local 0 true -

demoram dolphinhost3 ds-ram/tmpfs ram 100 true -

[lns@dolphinhost3 bins]$ ./dolphindocker run -it -v firstclone:/opt/clone ubuntu:latest bas

root@3970a269caa5:/# cd /opt/clone/

root@3970a269caa5:/opt/clone# ls

1 hostname hosts

root@3970a269caa5:/opt/clone# exit

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume info firstclone

volume info on firstclone

[

{

"Name": "firstclone", "Voltype": "ds-ceph", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 05:56:37 EDT 2015", "Policy": "distributed",

"Fstype": "xfs", "MBSize": 100, "AutoMigrate": false, "Shared": true, "Mountpoint": "", "Inuse": [], "Containers": [],

"LastAccessTimestamp": "Thu Oct 8 05:59:04 EDT 2015", "IsClone": true,

"ParentSnapshot": "newvolume@newsnap", "QoSState": false,

"QoSProfile": ""

}

]

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot info newvolume@newsnap

2015/10/08 05:59:33 Get snapshots info newvolume - newsnap

[

{

"Name": "newsnap", "Volume": "newvolume", "Type": "default", "CreatedBy": "dolphinhost3",

"CreatedAt": "Thu Oct 8 05:49:10 EDT 2015",

"Size": 104857600, "Children": [

"firstclone"

]

}

]

volume snapshot info on newvolume@newsnap

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume snapshot rm -v=newvolume newsnap

2015/10/08 05:59:47 snapshot rm {Name:newsnap Volume:newvolume Type: Opts:map[]}

Error response from daemon: {"Name":"newsnap","Volume":"newvolume","Err":"Volume snapshot i

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume rm newvolume

Error response from daemon: {"Name":"newvolume","Err":"exit status 39"}

[lns@dolphinhost3 bins]$ ./dolphindocker dsvolume rollback newvolume@newsnap

2015/10/08 06:00:22 SnapshotRollback(newvolume@newsnap) with opts {Name:newsnap Volume:newv

dsvolume rollback response {"Name":"newsnap","Volume":"newvolume","Err":""}

[lns@dolphinhost3 bins]$ ./dolphindocker run -it -v newvolume:/opt/rollback ubuntu:latest b

root@1545fee295af:/# cd /opt/rollback/

root@1545fee295af:/opt/rollback# ls

1 hostname hosts

root@1545fee295af:/opt/rollback# exit

[lns@dolphinhost3 bins]$

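The effect of QoS in the console log can be checked with a little arithmetic on the two `dd` runs against demovol1, one before and one after the newprofile profile was applied and enabled. The byte count and timings below are copied from that output; the `throughput` helper is only for illustration. The article does not state the units of ReadBW, so reading the result as "about 2000 bytes per second, matching newprofile's ReadBW of 2000" is an inference, not a documented fact.

```python
BYTES_READ = 1048576  # bytes copied by dd in both runs (bs=1M count=1)


def throughput(seconds):
    """Average read rate in bytes per second for one dd run."""
    return BYTES_READ / seconds


before = throughput(0.0625218)  # run before QoS was enabled
after = throughput(522.243)     # run after 'qos enable -g demovol1'

print(f"before QoS: {before / 1e6:.1f} MB/s")
print(f"after QoS:  {after / 1e3:.1f} kB/s")
print(f"slowdown:   {before / after:.0f}x")
```

The computed rates agree with the figures dd itself reported (16.8 MB/s and 2.0 kB/s), a slowdown of several thousand times once the profile takes effect.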

8 Libstorage Events

[lns@dolphinhost3 bins]$ ./dolphindocker events

2015-10-08T05:47:16.675882847-04:00 demovol1: (from libstorage) Snapshot[demovol1@demosnap1 create success

2015-10-08T05:48:14.413457724-04:00 newvolume: (from libstorage) Volume create success

2015-10-08T05:48:37.341001897-04:00 2b1e11bc2d45fe26b1b3082ce1a1123bd65ef1ebb61b1a0a0244a10 (from ubuntu:latest) create

2015-10-08T05:48:37.447786698-04:00 2b1e11bc2d45fe26b1b3082ce1a1123bd65ef1ebb61b1a0a0244a10 (from ubuntu:latest) attach

2015-10-08T05:48:38.118070084-04:00 newvolume: (from libstorage) Mount success

2015-10-08T05:48:38.118897857-04:00 2b1e11bc2d45fe26b1b3082ce1a1123bd65ef1ebb61b1a0a0244a10 (from ubuntu:latest) start

2015-10-08T05:48:38.235199874-04:00 2b1e11bc2d45fe26b1b3082ce1a1123bd65ef1ebb61b1a0a0244a10 (from ubuntu:latest) resize

2015-10-08T05:48:50.463620278-04:00 2b1e11bc2d45fe26b1b3082ce1a1123bd65ef1ebb61b1a0a0244a10 (from ubuntu:latest) die

2015-10-08T05:48:50.723378247-04:00 newvolume: (from libstorage) Unmount[newvolume] container 2b1e11bc success

2015-10-08T05:49:10.341208906-04:00 newvolume: (from libstorage) Snapshot[newvolume@newsnap create success

2015-10-08T05:49:22.165250102-04:00 ef49217deb4f6b121b09d6ee714d7546dad5875129b20719a36df82 (from ubuntu:latest) create

2015-10-08T05:49:22.177473380-04:00 ef49217deb4f6b121b09d6ee714d7546dad5875129b20719a36df82 (from ubuntu:latest) attach

2015-10-08T05:49:22.861275198-04:00 newvolume: (from libstorage) Mount success

2015-10-08T05:49:22.862213412-04:00 ef49217deb4f6b121b09d6ee714d7546dad5875129b20719a36df82 (from ubuntu:latest) start

2015-10-08T05:49:23.036122376-04:00 newvolume: (from libstorage) Unmount[newvolume] container ef49217d success

2015-10-08T05:49:23.439618024-04:00 newvolume: (from libstorage) Unmount[newvolume] failed exit status 32

2015-10-08T05:49:23.439675043-04:00 ef49217deb4f6b121b09d6ee714d7546dad5875129b20719a36df82 (from ubuntu:latest) die

2015-10-08T05:49:25.223243216-04:00 f54ec93290c0a714a79007d928788e4aa96fed504a39890b3f9a308 (from ubuntu:latest) create

2015-10-08T05:49:25.327953586-04:00 f54ec93290c0a714a79007d928788e4aa96fed504a39890b3f9a308 (from ubuntu:latest) attach

2015-10-08T05:49:25.504156400-04:00 newvolume: (from libstorage) Mount success

2015-10-08T05:49:25.504872335-04:00 f54ec93290c0a714a79007d928788e4aa96fed504a39890b3f9a308 (from ubuntu:latest) start

2015-10-08T05:49:25.622608684-04:00 f54ec93290c0a714a79007d928788e4aa96fed504a39890b3f9a308 (from ubuntu:latest) resize

2015-10-08T05:50:26.119006635-04:00 f54ec93290c0a714a79007d928788e4aa96fed504a39890b3f9a308 (from ubuntu:latest) die

2015-10-08T05:50:26.380619881-04:00 newvolume: (from libstorage) Unmount[newvolume] container f54ec932 success

2015-10-08T05:56:37.285999505-04:00 firstclone: (from libstorage) Clone volume newvolume@ne success

2015-10-08T05:58:58.731584155-04:00 3970a269caa59a2e64d665702946ce269f534764b5c25a396f7c2df (from ubuntu:latest) create

2015-10-08T05:58:58.837915799-04:00 3970a269caa59a2e64d665702946ce269f534764b5c25a396f7c2df (from ubuntu:latest) attach

2015-10-08T05:59:00.094099907-04:00 firstclone: (from libstorage) Mount success

2015-10-08T05:59:00.095190081-04:00 3970a269caa59a2e64d665702946ce269f534764b5c25a396f7c2df (from ubuntu:latest) start

2015-10-08T05:59:00.238547428-04:00 3970a269caa59a2e64d665702946ce269f534764b5c25a396f7c2df (from ubuntu:latest) resize

2015-10-08T05:59:04.432485014-04:00 3970a269caa59a2e64d665702946ce269f534764b5c25a396f7c2df (from ubuntu:latest) die

2015-10-08T05:59:04.772842691-04:00 firstclone: (from libstorage) Unmount[firstclone] container 3970a269 success

2015-10-08T05:59:47.016443142-04:00 newvolume: (from libstorage) Snapshot[newvolume@newsnap delete failed Volume snapshot inuse

2015-10-08T06:00:03.254380587-04:00 newvolume: (from libstorage) Volume destroy failed exit

2015-10-08T06:00:22.505840283-04:00 newvolume: (from libstorage) VolumeRollback newvolume@newsnap success

2015-10-08T06:00:43.861918486-04:00 1545fee295afac7fd8e743a2811b3c3f8ad0e027e9ca482695e77ce (from ubuntu:latest) create

2015-10-08T06:00:43.968121844-04:00 1545fee295afac7fd8e743a2811b3c3f8ad0e027e9ca482695e77ce (from ubuntu:latest) attach

2015-10-08T06:00:47.125238229-04:00 newvolume: (from libstorage) Mount success

2015-10-08T06:00:47.126041470-04:00 1545fee295afac7fd8e743a2811b3c3f8ad0e027e9ca482695e77ce (from ubuntu:latest) start

2015-10-08T06:00:47.237933994-04:00 1545fee295afac7fd8e743a2811b3c3f8ad0e027e9ca482695e77ce (from ubuntu:latest) resize

2015-10-08T06:00:52.135643720-04:00 1545fee295afac7fd8e743a2811b3c3f8ad0e027e9ca482695e77ce (from ubuntu:latest) die

2015-10-08T06:00:52.873037212-04:00 newvolume: (from libstorage) Unmount[newvolume] container 1545fee2 success
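Because Libstorage events share the stream with ordinary container events, a consumer may want to pick them out. The sketch below assumes the line shape seen in the event stream above (timestamp, volume name followed by a colon, the marker "(from libstorage)", then a free-form message); the regular expression and the helper names `libstorage_events` and `failures` are illustrative, not part of Libstorage.

```python
import re

# Matches e.g.
# "2015-10-08T05:48:14.413457724-04:00 newvolume: (from libstorage) Volume create success"
EVENT_RE = re.compile(
    r"^(?P<timestamp>\S+) (?P<volume>[^\s:]+): "
    r"\(from libstorage\) (?P<message>.+)$"
)


def libstorage_events(lines):
    """Return one dict per Libstorage event, skipping container events."""
    events = []
    for line in lines:
        match = EVENT_RE.match(line.strip())
        if match:
            events.append(match.groupdict())
    return events


def failures(events):
    """Keep only the events whose message reports a failure."""
    return [event for event in events if "failed" in event["message"]]
```

Timestamps are kept as strings here; they carry nanosecond precision, which not every date parser accepts.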

9 Work in Progress

- A new Volume Controller for GlusterFS is being integrated.

- Migration is being worked on.

docker dsvolume migrate {--tofar|--tonear} -v|--volume=VOLUME S3OBJECT

- Local volumes need to use thin pools on device mapper. Refer to Convoy: https://github.com/rancher/convoy/blob/master/docs/devicemapper.md

10 Related Technologies

This section describes and tracks related technologies for cloud container management.

10.1 Kubernetes vs Docker Compose

Kubernetes, in short, is awesome. Its design rests on strong fundamentals drawn from Google’s decade-long experience with container management. Docker Compose is very primitive: it understands container lifecycles well, but Kubernetes understands the application lifecycle over containers better. And we deploy applications, not containers.

10.2 Mesos

Kubernetes so far connects to and understands only containers. But other workloads, such as MapReduce, batch processing and MPI cloud applications, do not necessarily fit the container framework. Mesos is great for this class of applications. It provides pluggable frameworks for extending Mesos to any kind of application, and it natively understands the Docker containerizer. So for managing a datacenter or cloud that serves varied application types, Mesos is great.

10.3 Mesos + Docker Engine + Swarm + Docker Compose vs Mesos + Docker Engine + Kubernetes

Swarm is Docker’s way of extending Docker Engine to be cluster aware. Kubernetes already does this well over plain Docker engine. As already mentioned, Docker Compose is very primitive and no match for the flexibility of Kubernetes. Mesos + Docker Engine + Kubernetes is the Mesosphere approach: its theme is to provide a consistent, Kubernetes-like interface to schedule and manage workloads of any application class over a cluster.

11 Conclusion

Libstorage fundamentals are strong. It can be integrated with Docker Engine as it stands today. Its functionality would enhance Docker engine’s capabilities and may be needed with Mesos as well. The community and Mesosphere are driving a complete ecosystem around Kubernetes, which understands the cluster and brings in the needed functionality, including volume management. In that architecture, Docker engine provides per-node functionality while Kubernetes works over the cluster. But Docker is extending libnetwork and has Swarm, which extends Docker engine to be cluster aware. So Libstorage is better suited within the Docker framework than elsewhere.