How the CPU Helped in the Evolution of Virtualization

Data Center (R)evolution

The first decade of the 21st century saw the emergence of many dominant players in enterprise data centers. Building on traditional ideas, players such as GE, IBM and Apple moved into data centers and adjacent technologies. Out of this disruptive shift came a new way of thinking that we know today as ‘virtualization’. VMware, founded in 1998, brought the idea to commodity x86 systems, and other players such as Citrix (Xen) and Microsoft worked on the same idea.

There is an age-old debate over whether software drives the need for hardware innovation or hardware innovation drives software innovation. Though most would side with the former, I think it is the business or the customer that drives change, and change happens when software and hardware are in sync with those requirements.

Technological changes have not always been ‘disruptive’. The past three decades were decades of upgrades, and specifically non-disruptive ones. When FC-SAN evolved from 2 Gbps to 16 Gbps, when parallel SCSI grew from 32 MB/s to roughly 300 MB/s and eventually gave way to SAS (Serial Attached SCSI), which now runs at 12 Gbps, or when InfiniBand and FCoE entered the picture, the way applications were deployed or managed did not change. In the last couple of years, however, there have been significant changes in the way infrastructure is deployed and managed.

In recent times, IT investors and innovators alike have rapidly changed how they think about storage infrastructure. That thinking is being influenced by developments in cloud (access anywhere, always available), big data analytics, hyperconvergence and more.

Let’s review one major technology that drove this change and contributed significantly to what virtualization is today.

Virtualization- A Brief History

It was IBM, in the early 1960s, that came up with the idea of a time-sharing computer to do away with batch processing on mainframes, in response to a similar solution from GE.

IBM’s CP-67 (CP/CMS) was the first commercial mainframe system that supported virtualization. The approach of this time-sharing computer was to divide memory and other system resources among users. Multics was one such time-sharing operating system, and it later inspired Unix. In fact, the idea of application virtualization took shape on Unix, with pioneering work done by Sun Microsystems in the early 1990s. In 1987, SoftPC, developed by Insignia Solutions, was hardware virtualization software that could run DOS on a UNIX box and was later brought to the Mac. Connectix’s Virtual PC, first released for the Mac in 1997, could run Windows in a Mac environment and was considered the best hosted virtualization solution until VMware came up with the GSX/ESX product series. That series began in 2001, but the significant breakthrough came after 2005.

Until 2005, virtualization on x86 was all about hosting software on an operating system that performed device emulation, binary translation and so on. The virtual OS was a guest, dependent on resources owned and controlled by the host operating system. This was a dead end: the performance of the virtual OS was neither reliable nor scalable, and the range of uses for such a software-driven OS was small. That was the era of software-only virtualization, of which “paravirtualization” was one flavor.

Hardware Assisted Virtualization

Performance was the key challenge virtualization had to overcome. Software-only approaches such as paravirtualization never evolved to the point where they could earn virtualization a place in the enterprise. Imagine a PC or workstation with a single CPU, barely 256 MB of RAM and a single NIC trying to run two operating systems in parallel. The resources could never be shared optimally, and when they were shared, the other operating system (host or guest) had to be frozen with no real access to the underlying hardware. Even for transactional database, ERP or web server workloads, server OEMs could not see a way to deploy virtualization software on servers running mission-critical applications.

This was despite the fact that applications did not use the scaled-up server hardware efficiently or optimally. VMware tried to challenge this ecosystem by building efficient GSX/ESX products, but they drained the CPU and network resources of the host. Mostly, the solution was used as a test bed at production support sites, to reproduce a customer problem by running real-time applications on a virtual OS. All this pushed processor manufacturers to look for a way to achieve resource optimization and sharing through cost-effective virtualization solutions.

Eventually, Intel and AMD dared to change the way their processors (CPUs) handled access by multiple operating systems hosted on them. They did this by finding a different way to privilege and de-privilege the operating system(s) in the CPU rings. Thus was born hardware-assisted, or CPU-assisted, virtualization.
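On a Linux host, one rough way to see whether a processor advertises these extensions is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below assumes that text format; the function name `detect_hw_virt` is made up for illustration, and a missing flag does not by itself prove the CPU lacks the feature.

```python
def detect_hw_virt(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None from Linux /proc/cpuinfo text.

    Hypothetical helper: it only inspects the 'flags' lines of the text
    it is given and does not query the CPU itself.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() != "flags":
            continue
        flags = set(value.split())
        if "vmx" in flags:   # flag advertised by Intel VT-x capable CPUs
            return "Intel VT-x"
        if "svm" in flags:   # flag advertised by AMD-V (SVM) capable CPUs
            return "AMD-V"
    return None
```

On a real Linux machine this could be called as `detect_hw_virt(open("/proc/cpuinfo").read())`.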

The CPU (processor) is the heart of any computing system. Traditionally, resource management requests were handled by the kernel of the host operating system (a single OS). The goal of hardware virtualization was therefore to let one or more virtual OSs access hardware resources as effectively as the host operating system does.

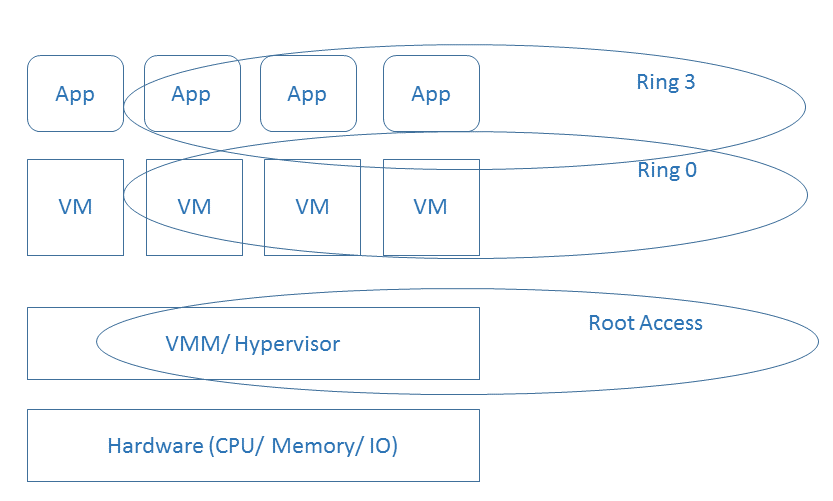

The CPU logically enforces different access levels known as “rings”, with Ring 0 the most privileged and Ring 3 the least. Before hardware/CPU-assisted virtualization, the CPU rings were organized as follows.

- Ring 0 - The innermost operational level of the CPU, which has root access. The host OS kernel ran in this ring.

- Ring 1 - OS-approved device drivers and hosted OSs. Any virtual OS had to reside here.

- Ring 2 - Third-party device drivers (VM) and other lower-privilege drivers.

- Ring 3 - User applications (those hosted on the host OS as well as on the VM).
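The ring layout above can be mimicked with a toy model. This is only a sketch under simplifying assumptions: the operation names and the `SoftwareVMM` class are invented for illustration, and the model pretends every privileged operation faults cleanly when issued outside Ring 0. On pre-2005 x86 some sensitive instructions did not fault at all, which is one reason binary translation was needed.

```python
from dataclasses import dataclass, field

# Illustrative operation names, not real x86 instructions.
PRIVILEGED_OPS = {"load_page_table", "disable_interrupts", "io_port_write"}

@dataclass
class SoftwareVMM:
    """Toy software VMM living in Ring 0 alongside the host kernel."""
    emulated: list = field(default_factory=list)

    def emulate(self, guest, op):
        # Every trapped operation costs a detour through the VMM.
        self.emulated.append((guest, op))
        return f"emulated {op} for {guest}"

def execute(ring, op, guest, vmm):
    """Run `op` issued by `guest` code executing in the given ring."""
    if op in PRIVILEGED_OPS and ring != 0:
        # A deprivileged guest kernel (Ring 1) cannot do this itself,
        # so the operation is handed to the VMM for emulation.
        return vmm.emulate(guest, op)
    return f"ran {op} natively in ring {ring}"
```

For example, `execute(1, "io_port_write", "guest-a", SoftwareVMM())` goes through the VMM, while an ordinary `add` issued from Ring 3 runs natively.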

Intel’s VT-x/VT-i (2005) and AMD-V (SVM, 2006) enabled “hardware-assisted virtualization” by giving the Virtual Machine Monitor (VMM), a.k.a. the hypervisor, a root access level of its own (the term “hypervisor” dates back to the mainframe era). This meant that a VM OS, traditionally a deprivileged guest, could now run with Ring 0 privilege and take full control of the resources assigned to it by the VMM. For example, if the VMM decides to allocate 4 CPU cores, one to each of 4 VMs, each VM OS can get dedicated access to a single CPU core. With scalable hosts such as blade servers, resource and application efficiency and performance could then scale in proportion to the number of CPU cores, memory, NIC/IO ports, etc. available in the server/host. This opened up many opportunities and ushered in the age of the virtualized data center, where mission-critical applications could run on VMs.
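The 4-cores-for-4-VMs example can be written down as a small allocation routine. This is a minimal sketch of dedicated (non-shared) core assignment, not how any particular hypervisor schedules CPUs; the function name `allocate_cores` and the VM names are hypothetical.

```python
def allocate_cores(vm_names, total_cores):
    """Give each VM its own dedicated, non-overlapping set of core IDs.

    Cores are split as evenly as possible; any remainder goes to the
    first VMs in the list.
    """
    if not vm_names:
        return {}
    if total_cores < len(vm_names):
        raise ValueError("not enough cores for one dedicated core per VM")
    per_vm, extra = divmod(total_cores, len(vm_names))
    allocation = {}
    next_core = 0
    for index, name in enumerate(vm_names):
        count = per_vm + (1 if index < extra else 0)
        allocation[name] = list(range(next_core, next_core + count))
        next_core += count
    return allocation
```

With 4 cores and 4 VMs each VM ends up with exactly one core; with 8 cores on the same host each VM gets two, which is the proportional scaling the paragraph describes.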

Here’s what changed with Hardware assisted Virtualization:

- Root (new access level) - VMM/hypervisor + memory/IO virtualization (resource sharing).

- Ring 0 - VM OS.

- Ring 1 - Eliminated/shadowed.

- Ring 2 - Eliminated/shadowed.

- Ring 3 - User applications hosted on the VMs.

Figure 1. Role of CPU in the evolution of Virtualization
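The layout with the new root level can be sketched in the same toy style. The assumption here is that the hypervisor chooses a set of guest operations to intercept, and everything else the guest kernel does in Ring 0 runs directly on the CPU. The `Hypervisor` class and the operation names are invented for illustration and do not correspond to real VT-x or AMD-V data structures.

```python
from dataclasses import dataclass, field

@dataclass
class Hypervisor:
    """Toy VMM at the root level; it decides which guest operations to intercept."""
    intercepted_ops: set
    exits: list = field(default_factory=list)

    def handle_vm_exit(self, guest, op):
        # Control leaves the guest only for operations the VMM asked to see.
        self.exits.append((guest, op))
        return f"{op} handled by VMM for {guest}"

def run_in_guest_ring0(guest, op, hypervisor):
    """Run `op` issued by a guest kernel executing in its own Ring 0."""
    if op in hypervisor.intercepted_ops:
        return hypervisor.handle_vm_exit(guest, op)
    # Not intercepted: the guest kernel executes it without VMM involvement.
    return f"{op} executed directly by {guest}"
```

The design point is that the set of intercepted operations is small and chosen by the VMM, so most guest kernel work no longer needs a detour through software emulation.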

A Few Major Impacts of the Change

- The guest operating system runs directly on the hardware, using the core functionality of the CPU.

- Reduced binary translation, as the VM OS can itself handle I/O, interrupts, resource requests, etc.

- Fewer slow device emulations, as the VMM allocates an isolated (at times dedicated) resource to a VM OS.

- Optimal resource utilization through network/storage/CPU isolation, with resource lock/release handled by the VMM.

- Enhanced security, availability and reliability through device isolation.

- Scalable hardware and software architecture that enabled VM migration and replication across hosts.

- Fewer hops across OS stacks and greatly reduced VM entry/exit overhead, bringing down I/O latency.

- The possibility of complete server virtualization, the idea that fueled the hyperconverged storage era.

Conclusion

Hardware-assisted virtualization changed the way virtualization was perceived and deployed. In the last decade and the current one, we have seen growing investment and innovation in enterprises deploying mission-critical workloads on virtual machines, bringing enormous savings in TCO/Opex. The credit for this goes to efficient deployment and optimal utilization of server hardware. Since 2005, Intel VT-x and AMD-V have continued to evolve to match real-time application needs, with lower latencies, reduced power consumption and a reliable host experience comparable to that of physical infrastructure. Multicore architectures, advances in high-speed RAM, TB-scale hard disks, SSDs, etc. enable virtualization to evolve into software-defined data centers, web-scale IT infrastructure and more. Who would have thought that one powerful innovation in CPU architecture could prove to be such a significant catalyst in redefining virtualization and the way data centers are deployed and managed?